Self-organizing mixture models

Abstract

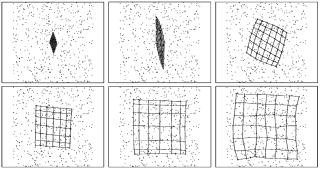

We present an expectation-maximization (EM) algorithm that yields topology-preserving maps of data based on probabilistic mixture models. Our approach is applicable to any mixture model for which a standard EM algorithm exists. Compared to other mixture-model approaches to self-organizing maps (SOMs), the function our algorithm maximizes has a clear interpretation: it is the sum of the data log-likelihood and a penalty term that enforces self-organization. Our approach allows principled handling of missing data and the learning of mixtures of SOMs. We present example applications illustrating our approach for continuous, discrete, and mixed discrete-continuous data.
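The abstract describes the objective only as "data log-likelihood plus a penalty term that enforces self-organization." The sketch below assumes one concrete form of that penalty, which is our illustrative reading rather than the paper's code. The E-step restricts each point's posterior over components to a fixed neighborhood distribution h(s | r) centred on a winning node r of a 1-D grid, choosing the r that minimizes KL(h(. | r) || p(. | x_n)). The M-step is then the ordinary mixture M-step using those responsibilities, which is why only the E-step differs from standard EM. The isotropic Gaussian components, shared variance, Gaussian neighborhood, 1-D grid, and the names `neighborhood`, `log_joint`, and `som_em` are all hypothetical choices made for illustration.

```python
import numpy as np


def neighborhood(K, width):
    """Hypothetical neighborhood: row r is h(s | r) over the K nodes of a 1-D chain,
    a Gaussian bump centred on node r, normalised to sum to 1."""
    idx = np.arange(K)
    h = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / width) ** 2)
    return h / h.sum(axis=1, keepdims=True)


def log_joint(X, pi, mu, sigma2):
    """log p(x_n, s) for isotropic Gaussian components sharing one variance (an assumption)."""
    N, D = X.shape
    sq = ((X[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)  # (N, K) squared distances
    return np.log(pi)[None, :] - 0.5 * D * np.log(2 * np.pi * sigma2) - sq / (2 * sigma2)


def som_em(X, K=10, width=1.0, n_iter=50, seed=0):
    """Sketch of EM with a restricted E-step; returns parameters and objective history."""
    rng = np.random.default_rng(seed)
    N, D = X.shape
    H = neighborhood(K, width)
    neg_entropy = (H * np.log(H)).sum(axis=1)  # sum_s h(s|r) log h(s|r), one value per r
    pi = np.full(K, 1.0 / K)
    mu = X[rng.choice(N, size=K, replace=False)].copy()
    sigma2 = X.var()
    history = []
    for _ in range(n_iter):
        # Restricted E-step: score[n, r] = sum_s h(s|r) log p(x_n, s) - sum_s h(s|r) log h(s|r)
        #                                = log p(x_n) - KL(h(.|r) || p(.|x_n))
        L = log_joint(X, pi, mu, sigma2)        # (N, K), indexed [n, s]
        score = L @ H.T - neg_entropy[None, :]  # (N, K), indexed [n, r]
        winners = score.argmax(axis=1)
        history.append(score.max(axis=1).sum())  # penalized log-likelihood at current params
        Q = H[winners]                          # responsibilities q_ns = h(s | r*_n)
        # M-step: the usual Gaussian-mixture updates, weighted by Q
        Nk = Q.sum(axis=0)
        pi = Nk / N
        mu = (Q.T @ X) / Nk[:, None]
        sq = ((X[:, None, :] - mu[None, :, :]) ** 2).sum(axis=2)
        sigma2 = (Q * sq).sum() / (N * D)
    return pi, mu, sigma2, history


# Usage on synthetic data lying near a line segment
rng = np.random.default_rng(1)
X = np.column_stack([rng.uniform(0.0, 1.0, 300), 0.05 * rng.standard_normal(300)])
pi, mu, sigma2, history = som_em(X, K=8, width=1.0, n_iter=30)
```

Because the restricted E-step and the M-step each increase the same penalized objective, `history` should be non-decreasing up to floating-point error. Swapping `log_joint` and the M-step for another model's (for example, a mixture of multinomials for discrete data) would leave the restricted E-step unchanged under these assumptions.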

Domains

Machine Learning [cs.LG]

Main file

verbeek03neuro_final.pdf (859.02 KB)

VVK05.png (52.38 KB)

Origin: Files produced by the author(s)

Format: Figure, Image