Weighted Transmedia Relevance Feedback for Image Retrieval and Auto-annotation

Abstract

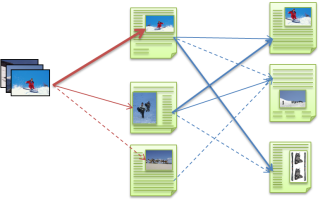

Large-scale multimodal image databases are now widely available, for example via photo-sharing sites where images come with textual descriptions and keyword annotations. Most existing work on image retrieval and image auto-annotation has considered uni-modal techniques: either query-by-example or query-by-text systems for image retrieval, and uni-modal classification for image auto-annotation. Recent state-of-the-art multimodal image retrieval and auto-annotation systems, however, combine different uni-modal models using late-fusion techniques. In addition, significant advances have been made using pseudo-relevance feedback techniques, as well as transmedia relevance models that swap modalities in the query expansion step of pseudo-relevance methods. While these techniques are promising, it is not trivial to set the parameters that control the late fusion and the pseudo- and cross-media relevance models. In this paper, we therefore propose approaches to learn these parameters from a labeled training set: queries with relevant and non-relevant documents, or images with relevant and non-relevant keywords. Three additional contributions are (i) two new parameterizations of transmedia and pseudo-relevance models, (ii) correction parameters for inter-query variations in the distribution of retrieval scores for both relevant and non-relevant documents, and (iii) an extension of TagProp, a nearest-neighbor-based image annotation method, to exploit transmedia relevance feedback. We evaluate our models on public benchmark data sets for image retrieval and annotation. On the data set of the ImageCLEF 2008 Photo Retrieval task, our retrieval experiments show that our learned models significantly improve retrieval performance over the current state-of-the-art. In our image annotation experiments on the COREL and IAPR data sets, we likewise observe annotation accuracies that improve over the current state-of-the-art results.
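As a rough illustration of the two ideas the abstract combines, the sketch below scores documents with a transmedia pseudo-relevance step (take the query's nearest neighbours in the visual modality, then rank documents by textual similarity to those neighbours) and fuses the result with the direct visual score by a weighted sum. It is a minimal sketch and not the parameterization proposed in the paper: the function names, the toy similarity values, the neighbourhood size `k`, and the fusion weights are all assumptions made for the example, and the weights are set by hand here, whereas the paper learns them from labeled training data.

```python
def transmedia_scores(visual_sim_to_query, text_sim, k=2):
    """Score each document by its textual similarity to the query's
    top-k visual neighbours, weighted by their visual similarity."""
    n = len(visual_sim_to_query)
    # Indices of the k documents most visually similar to the query.
    neighbours = sorted(
        range(n), key=lambda i: visual_sim_to_query[i], reverse=True
    )[:k]
    return [
        sum(visual_sim_to_query[j] * text_sim[j][d] for j in neighbours)
        for d in range(n)
    ]


def late_fusion(score_lists, weights):
    """Weighted sum of per-document scores coming from several models."""
    return [
        sum(w * scores[d] for w, scores in zip(weights, score_lists))
        for d in range(len(score_lists[0]))
    ]


# Toy data (invented for illustration): 4 documents.
visual_sim_to_query = [0.9, 0.8, 0.1, 0.2]
text_sim = [  # symmetric document-to-document textual similarity
    [1.0, 0.2, 0.7, 0.0],
    [0.2, 1.0, 0.6, 0.1],
    [0.7, 0.6, 1.0, 0.0],
    [0.0, 0.1, 0.0, 1.0],
]

cross = transmedia_scores(visual_sim_to_query, text_sim, k=2)
# Hand-set fusion weights (assumption); the paper learns such parameters.
fused = late_fusion([visual_sim_to_query, cross], weights=[0.5, 0.5])

visual_ranking = sorted(
    range(4), key=lambda d: visual_sim_to_query[d], reverse=True
)
fused_ranking = sorted(range(4), key=lambda d: fused[d], reverse=True)
print(visual_ranking, fused_ranking)
```

In this toy setting document 2 looks visually unlike the query but shares text with the two visually closest documents, so the transmedia term lifts it above document 3 in the fused ranking.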

Main file

RT-0415.pdf (1.52 MB)



figure1.png (101.19 KB)



Origin: Files produced by the author(s)

Format: Figure, Image
