Accelerated greedy mixture learning

Résumé

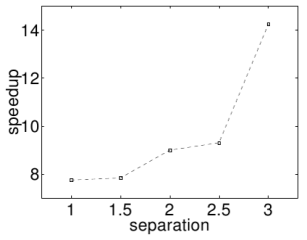

Mixture probability densities are popular models that are used in several data mining and machine learning applications, e.g., clustering. A standard algorithm for learning such models from data is the Expectation-Maximization (EM) algorithm. However, EM can be slow with large datasets, and therefore approximation techniques are needed. In this paper we propose a variational approximation to the greedy EM algorithm which oers speedups that are at least linear in the number of data points. Moreover, by strictly increasing a lower bound on the data log-likelihood in every learning step, our algorithm guarantees convergence. We demonstrate the proposed algorithm on a synthetic experiment where satisfactory results are obtained.

Domaines

Apprentissage [cs.LG]

Fichier principal

verbeek04bnl.pdf (107.57 Ko)

Télécharger le fichier

verbeek04bnl.pdf (107.57 Ko)

Télécharger le fichier

NVV04.png (11.79 Ko)

Télécharger le fichier

NVV04.png (11.79 Ko)

Télécharger le fichier

Origine : Fichiers produits par l'(les) auteur(s)

Format : Figure, Image

Loading...