Understanding Everyday Hands in Action from RGB-D Images

Abstract

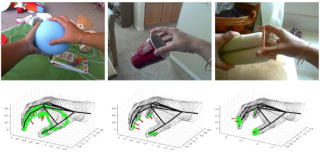

We analyze functional manipulations of handheld objects, formalizing the problem as one of fine-grained grasp classification. To do so, we use a recently developed fine-grained taxonomy of human-object grasps. We introduce a large dataset of 12,000 RGB-D images covering 71 everyday grasps in natural interactions. Our dataset differs from past work (typically addressed from a robotics perspective) in its scale, its diversity, and its combination of RGB and depth data. From a computer-vision perspective, our dataset allows for exploration of contact and force prediction (crucial concepts in functional grasp analysis) from perceptual cues. We present extensive experimental results with state-of-the-art baselines, illustrating the role of segmentation, object context, and 3D understanding in functional grasp analysis. We demonstrate a nearly 2x improvement over prior work and over a naive deep baseline, while pointing out important directions for improvement.

Origin: Files produced by the author(s)

Format: Figure, Image
