Predicting Deeper into the Future of Semantic Segmentation

Abstract

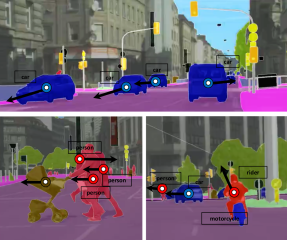

The ability to predict and therefore to anticipate the future is an important attribute of intelligence. It is also of utmost importance in real-time systems, e.g. in robotics or autonomous driving, which depend on visual scene understanding for decision making. While prediction of the raw RGB pixel values in future video frames has been studied in previous work, here we introduce the novel task of predicting semantic segmentations of future frames. Given a sequence of video frames, our goal is to predict segmentation maps of not yet observed video frames that lie up to a second or further in the future. We develop an autoregressive convolutional neural network that learns to iteratively generate multiple frames. Our results on the Cityscapes dataset show that directly predicting future segmentations is substantially better than predicting and then segmenting future RGB frames. Prediction results up to half a second in the future are visually convincing and are much more accurate than those of a baseline based on warping semantic segmentations using optical flow.
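The abstract describes an autoregressive network that generates several future frames iteratively: each predicted segmentation is fed back as input for the next step. The Python sketch below illustrates only that rollout loop, under stated assumptions. `predict_next` is a hypothetical one-step predictor standing in for the trained convolutional network. Frames are toy 2-D grids rather than real Cityscapes class-probability maps. `linear_extrapolation` is a made-up toy predictor included only so the example runs. This is not the authors' implementation.

```python
from collections import deque
from typing import Callable, List, Sequence

# Hypothetical stand-in for one segmentation map: a 2-D grid of scores.
Frame = List[List[float]]


def autoregressive_rollout(
    context: Sequence[Frame],
    predict_next: Callable[[List[Frame]], Frame],
    num_future: int,
) -> List[Frame]:
    """Generate num_future frames, feeding each prediction back as input."""
    if not context:
        raise ValueError("context must contain at least one observed frame")
    # Fixed-size window: appending a new frame drops the oldest one.
    window = deque(context, maxlen=len(context))
    predictions: List[Frame] = []
    for _ in range(num_future):
        next_frame = predict_next(list(window))  # one-step-ahead prediction
        predictions.append(next_frame)
        window.append(next_frame)  # the prediction becomes part of the input
    return predictions


def linear_extrapolation(window: List[Frame]) -> Frame:
    """Hypothetical toy predictor: extrapolate each cell linearly.

    Requires at least two frames in the window.
    """
    last, prev = window[-1], window[-2]
    return [
        [2 * a - b for a, b in zip(row_last, row_prev)]
        for row_last, row_prev in zip(last, prev)
    ]


if __name__ == "__main__":
    observed = [[[0.0]], [[1.0]], [[2.0]], [[3.0]]]  # four 1x1 toy "frames"
    print(autoregressive_rollout(observed, linear_extrapolation, num_future=3))
    # prints [[[4.0]], [[5.0]], [[6.0]]]
```

In a loop like this, the input window keeps a fixed length, so a single one-step model can be rolled out for any number of future frames. The trade-off is that errors in early predictions are fed back and can accumulate in later ones.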

Main file

0270.pdf (3.97 MB)

HAL-logo.png (768.86 KB)

0270-supp.pdf (2.59 MB)

17results.gif (135.86 KB)

17results.jpg (6.54 KB)

HAL-logo.jpg (111.21 KB)

Origin: Files produced by the author(s)

Format: Figure, Image