Efficient greedy learning of Gaussian mixtures

Abstract

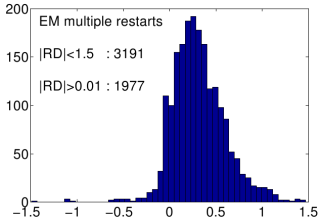

We present a deterministic greedy method for learning a mixture of Gaussians. The key idea is to build up the mixture component-wise: we start with one component, then add new components one at a time, updating the mixture between component insertions. Instead of directly solving an optimization problem involving the parameters of all components, we replace it with a sequence of component allocation problems, each involving only the parameters of the new component. We include experimental results from extensive tests on artificially generated data sets. The new learning method is compared with the standard approach of EM with random initializations, as well as with other existing approaches to learning Gaussian mixtures.
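The component-wise scheme described in the abstract can be illustrated with a minimal sketch. This is not the authors' exact procedure: the restriction to one-dimensional data, the use of data quantiles as deterministic candidate locations, the initial variance of a candidate, the iteration counts, and all function names (`greedy_gmm`, `fit_new_component`, and so on) are assumptions made for illustration. Each insertion step keeps the current mixture `f_old` fixed and fits only the new component and its mixing weight `alpha` in `(1 - alpha) * f_old + alpha * new`; a full EM pass over all components then updates the mixture before the next insertion.

```python
import numpy as np

def normal_pdf(x, mean, var):
    return np.exp(-0.5 * (x - mean) ** 2 / var) / np.sqrt(2 * np.pi * var)

def mixture_pdf(x, weights, means, variances):
    # Weighted sum of component densities, evaluated at every point in x.
    return sum(w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances))

def log_likelihood(x, weights, means, variances):
    return float(np.sum(np.log(mixture_pdf(x, weights, means, variances))))

def em(x, weights, means, variances, n_iter=50, min_var=1e-6):
    # Standard EM over all components (the update between insertions).
    weights = np.array(weights, dtype=float)
    means = np.array(means, dtype=float)
    variances = np.array(variances, dtype=float)
    for _ in range(n_iter):
        dens = weights[:, None] * normal_pdf(x[None, :], means[:, None], variances[:, None])
        resp = dens / dens.sum(axis=0)
        nk = resp.sum(axis=1)
        weights = nk / x.size
        means = (resp @ x) / nk
        sq_dev = (x[None, :] - means[:, None]) ** 2
        variances = np.maximum((resp * sq_dev).sum(axis=1) / nk, min_var)
    return weights, means, variances

def fit_new_component(x, f_old, mean, var, alpha=0.5, n_iter=30, min_var=1e-6):
    # Partial EM: f_old stays fixed; only the new component and alpha change.
    for _ in range(n_iter):
        new = alpha * normal_pdf(x, mean, var)
        resp = new / ((1 - alpha) * f_old + new)
        n_new = resp.sum()
        if n_new < 1e-12:  # candidate explains no data; stop updating it
            break
        alpha = n_new / x.size
        mean = (resp @ x) / n_new
        var = max((resp @ (x - mean) ** 2) / n_new, min_var)
    return alpha, mean, var

def greedy_gmm(x, max_components, n_candidates=10):
    x = np.asarray(x, dtype=float)
    # Start with a single component fitted to all the data.
    weights, means, variances = np.array([1.0]), np.array([x.mean()]), np.array([x.var()])
    # Assumption: deterministic candidate locations taken from data quantiles.
    candidates = np.quantile(x, np.linspace(0.05, 0.95, n_candidates))
    for _ in range(max_components - 1):
        f_old = mixture_pdf(x, weights, means, variances)
        best = None
        for c in candidates:
            alpha, mean, var = fit_new_component(x, f_old, c, x.var() / 10)
            ll = np.sum(np.log((1 - alpha) * f_old + alpha * normal_pdf(x, mean, var)))
            if best is None or ll > best[0]:
                best = (ll, alpha, mean, var)
        _, alpha, mean, var = best
        # Insert the best candidate, then update the whole mixture with EM.
        weights = np.append((1 - alpha) * weights, alpha)
        means = np.append(means, mean)
        variances = np.append(variances, var)
        weights, means, variances = em(x, weights, means, variances)
    return weights, means, variances
```

Calling `greedy_gmm(x, k)` on a one-dimensional array `x` returns the weights, means, and variances of a `k`-component mixture. Because the candidate locations are fixed quantiles rather than random draws, repeated runs on the same data give the same result, which is the sense in which this sketch is deterministic.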

Domains

Machine Learning [cs.LG]

Main file

verbeek01bnaic.pdf (168.9 KB)

Download file

VVK01b.png (22.22 KB)

Download file

Origin: Files produced by the author(s)

Format: Figure, Image